ChatGPT for Developer Productivity

Introduction

In today’s world of software engineering, crafting tests has become a vital companion to writing code. This is due to the rapid pace at which code evolves, especially within agile development methods. With practices like shared code ownership and continuous integration, automated test cases play a crucial role. They not only ensure that all existing code functions correctly, aligning with the developers’ intentions, but also serve as guides when passing on the code to fellow developers for future work. The dual responsibilities of creating functional code and its corresponding tests must match the speed of innovation and the accelerating release cycles.

However, the race to decrease lead time — measured from when a task is selected for work to its actual release — cannot be won solely by churning out more code at a faster rate. The solution lies in automating repetitive tasks. This smarter approach paves the way for efficiency, allowing more time and effort to be directed towards innovation and other value-added activities.

Taking a Deeper Look

When a developer picks up a user story from the sprint backlog, the first thing to analyse is the acceptance criteria. The code for the feature should be written so that the acceptance criteria are always met. Frameworks such as Cucumber help automate Behaviour Driven Development (BDD) tests, which assert that newly written code meets the acceptance criteria. This is all good. However, writing these tests from a blank slate takes effort, which sometimes includes finding similar code to customise for the current task. What if we could search for a template to get started and move ahead more quickly?

Let’s say the software engineer spends 4 or 5 days writing the code, and the acceptance tests pass. Now it is time to check in the code. However, it first needs to go through a pull request (PR), which triggers a peer review before the code can be merged into the develop branch. Everyone is usually busy working on their own code within a scrum sprint, and an in-depth review of a PR can take significant effort from both the reviewers and the author. The reviewer has to understand the requirement, the context, and the assumptions, and determine whether the code contains logical errors that, if not rectified, could lead to an issue in production. The reviewer also has to check that the code adheres to the coding standards and is professionally written: that it handles the edge cases and does not degrade the overall standards, performance, security, and quality of the existing code. This effort, while beneficial, often goes untracked. Most of the time, developers want to get this step done as soon as possible and, given the time constraints, are sometimes willing to lower the bar a little.

Unless the team follows Test Driven Development (TDD), tests tend to be written after the code. Often only the bare minimum of unit tests is written to sustain a minimum level of test coverage. However, the key is to cover all the flows in the code and validate how it handles all the potential input parameter values. The broader the gap here, the greater the chance of bugs in the code. Testing all possible scenarios can be hard work, and when time is a constraint, some of the tests could get skipped.
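To make the point about input values concrete, here is a minimal sketch of a table of inputs that includes the boundaries a bare-minimum test suite tends to skip. The function `minimumPaymentCents` and its rule (2% of the balance, with a floor of 25.00, never more than the balance itself) are hypothetical and invented purely for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class InputCoverageSketch {

    // Hypothetical function under test (rule invented for this example):
    // 2% of the balance, at least 25.00, but never more than the balance.
    static long minimumPaymentCents(long balanceCents) {
        if (balanceCents < 0) {
            throw new IllegalArgumentException("balance cannot be negative");
        }
        long twoPercent = balanceCents * 2 / 100;
        return Math.min(balanceCents, Math.max(2_500, twoPercent));
    }

    public static void main(String[] args) {
        // Input -> expected output, one entry per flow or boundary.
        Map<Long, Long> cases = new LinkedHashMap<>();
        cases.put(0L, 0L);            // nothing owed
        cases.put(1_000L, 1_000L);    // balance below the floor
        cases.put(2_500L, 2_500L);    // balance exactly at the floor
        cases.put(125_000L, 2_500L);  // 2% equals the floor
        cases.put(500_000L, 10_000L); // 2% above the floor

        for (Map.Entry<Long, Long> c : cases.entrySet()) {
            long actual = minimumPaymentCents(c.getKey());
            if (actual != c.getValue()) {
                throw new AssertionError("balance " + c.getKey()
                        + ": expected " + c.getValue() + " but got " + actual);
            }
        }

        // Invalid input is a flow of its own and deserves a test too.
        try {
            minimumPaymentCents(-1L);
            throw new AssertionError("negative balance should be rejected");
        } catch (IllegalArgumentException expected) {
            System.out.println("All input cases behaved as expected");
        }
    }
}
```

A suite that only checked a single typical balance would pass even if the floor or the negative-balance guard were missing, which is the gap the paragraph above describes.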

Listing the Problems

We have three underlying problems identified from the preceding section:

- There need to be better ways to quickly get started with writing automated BDD test cases that verify the acceptance criteria.

- There needs to be a better way of making peer code reviews more effective.

- Test cases need to be auto-generated in a manner that reveals software bugs or prompts the software engineer to have a conversation with the product team to clarify scenarios.

Let’s explore how ChatGPT could provide solutions to these problems.

Automated BDD testing

We can go prompt by prompt at chat.openai.com, following a logical sequence of prompts and making sure we get the expected response from ChatGPT at each step.

First, we start with this prompt:

- Let us assume we have the following acceptance criteria for a user story:

With nothing else provided, this prompts ChatGPT to ask for additional inputs.

We then supply the acceptance criteria and get a further response from ChatGPT:

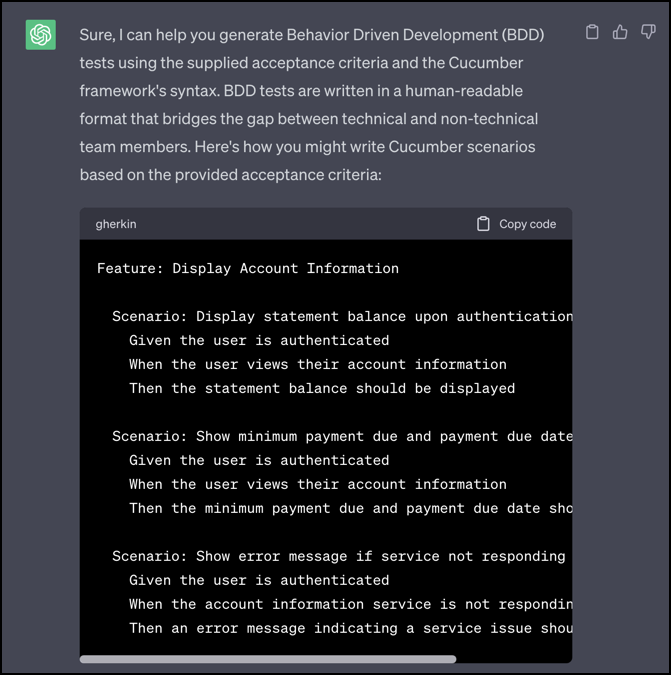

Now, let’s go for the code generation section. ChatGPT generates the feature file, which specifies how the system under development is expected to behave under varying scenarios.

Full listing here:

    Feature: Display Account Information

      Scenario: Display statement balance upon authentication
        Given the user is authenticated
        When the user views their account information
        Then the statement balance should be displayed

      Scenario: Show minimum payment due and payment due date
        Given the user is authenticated
        When the user views their account information
        Then the minimum payment due and payment due date should be displayed

      Scenario: Show error message if service not responding or timeout
        Given the user is authenticated
        When the account information service is not responding
        Then an error message indicating a service issue should be displayed

The output then continues with the Cucumber step definitions, written in Java.

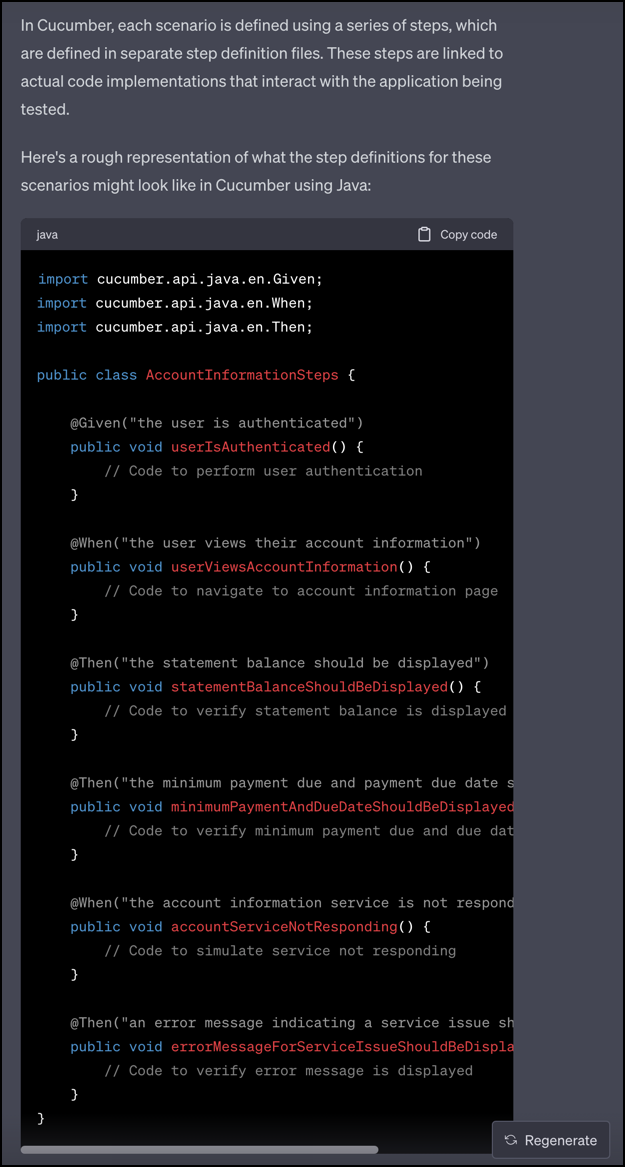

Full source code:

    // Imports as generated by ChatGPT. Newer Cucumber-JVM releases
    // provide these annotations in the io.cucumber.java.en package.
    import cucumber.api.java.en.Given;
    import cucumber.api.java.en.When;
    import cucumber.api.java.en.Then;

    public class AccountInformationSteps {

        @Given("the user is authenticated")
        public void userIsAuthenticated() {
            // Code to perform user authentication
        }

        @When("the user views their account information")
        public void userViewsAccountInformation() {
            // Code to navigate to account information page
        }

        @Then("the statement balance should be displayed")
        public void statementBalanceShouldBeDisplayed() {
            // Code to verify statement balance is displayed
        }

        @Then("the minimum payment due and payment due date should be displayed")
        public void minimumPaymentAndDueDateShouldBeDisplayed() {
            // Code to verify minimum payment due and due date are displayed
        }

        @When("the account information service is not responding")
        public void accountServiceNotResponding() {
            // Code to simulate service not responding
        }

        @Then("an error message indicating a service issue should be displayed")
        public void errorMessageForServiceIssueShouldBeDisplayed() {
            // Code to verify error message is displayed
        }
    }

As the ChatGPT output states, and as is pretty evident:

- The actual implementation of these steps will depend on your application’s code structure and the tools you’re using for testing. Make sure to adapt the step definitions to match your application’s behavior and the testing libraries you’re using with Cucumber.

Additionally, you’ll need to set up the Cucumber framework and the necessary dependencies in your project to be able to run these BDD tests. The code provided here is a basic example and may need further customization to match your specific testing environment.

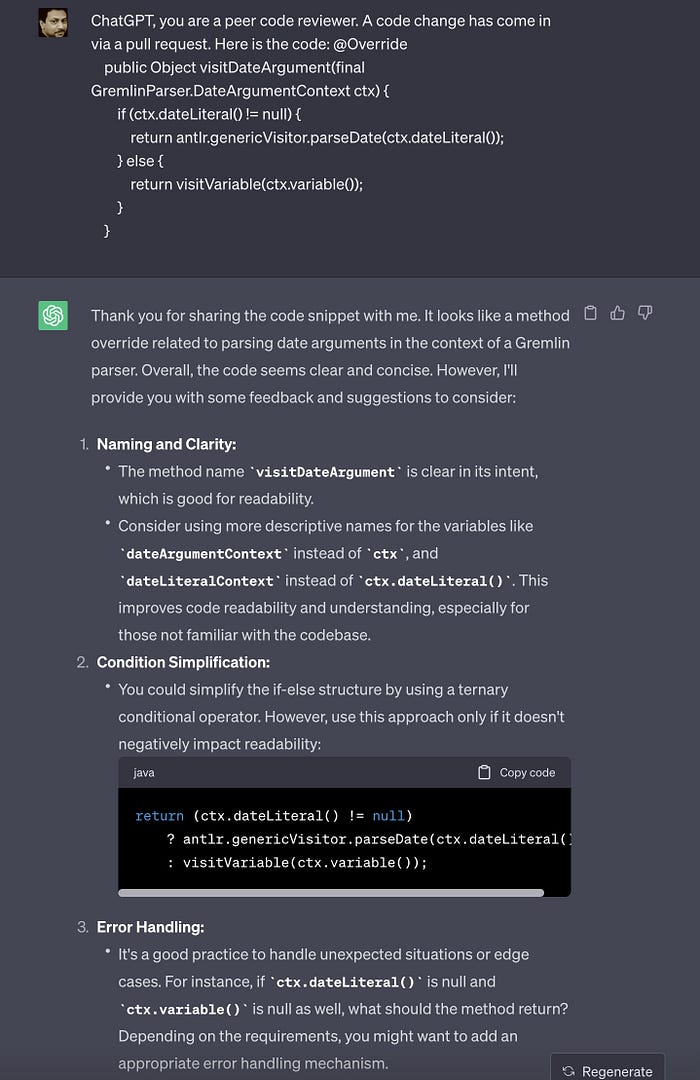
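As a rough illustration of what adapting the step definitions might involve, the sketch below fills in a few of the placeholder bodies in plain Java. It deliberately leaves out the Cucumber dependency so that it runs on its own; the matching annotations appear only as comments. `FakeAccountService`, the balance value, and the displayed messages are hypothetical stand-ins, not part of the ChatGPT output or of any real application.

```java
import java.util.Optional;

class AccountStepsSketch {

    // Hypothetical stand-in for the real account information service.
    static class FakeAccountService {
        private boolean responding = true;

        void stopResponding() {
            responding = false;
        }

        // An empty result models a timeout or an unresponsive service.
        Optional<String> fetchStatementBalance() {
            return responding ? Optional.of("1250.00") : Optional.empty();
        }
    }

    private final FakeAccountService service = new FakeAccountService();
    private boolean authenticated;
    private String displayedText = "";

    // @Given("the user is authenticated")
    void userIsAuthenticated() {
        authenticated = true;
    }

    // @When("the user views their account information")
    void userViewsAccountInformation() {
        if (!authenticated) {
            throw new IllegalStateException("user must be authenticated first");
        }
        displayedText = service.fetchStatementBalance()
                .map(balance -> "Statement balance: " + balance)
                .orElse("Error: account information is temporarily unavailable");
    }

    // @When("the account information service is not responding")
    void accountServiceNotResponding() {
        service.stopResponding();
        userViewsAccountInformation(); // the page is still requested, but the call fails
    }

    // @Then("the statement balance should be displayed")
    void statementBalanceShouldBeDisplayed() {
        require(displayedText.startsWith("Statement balance: "));
    }

    // @Then("an error message indicating a service issue should be displayed")
    void errorMessageForServiceIssueShouldBeDisplayed() {
        require(displayedText.startsWith("Error: "));
    }

    private void require(boolean condition) {
        if (!condition) {
            throw new AssertionError("unexpected display: " + displayedText);
        }
    }

    public static void main(String[] args) {
        AccountStepsSketch happyPath = new AccountStepsSketch();
        happyPath.userIsAuthenticated();
        happyPath.userViewsAccountInformation();
        happyPath.statementBalanceShouldBeDisplayed();

        AccountStepsSketch outage = new AccountStepsSketch();
        outage.userIsAuthenticated();
        outage.accountServiceNotResponding();
        outage.errorMessageForServiceIssueShouldBeDisplayed();

        System.out.println("Both scenarios passed");
    }
}
```

In a real project the fake would be replaced by whatever the application uses to reach the account service (a test double, a stubbed HTTP endpoint, or a test environment), and Cucumber rather than `main` would drive the steps from the feature file.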

Peer code review with ChatGPT

ChatGPT is not intended to replace a human code reviewer, but we can already see its potential for helping a reviewer out. Here is an example, which also shows the opportunity for educating developers on how to perform an effective code review:

Isn’t this helpful? The basics of a good code review are suggested, and could give a code reviewer a context-specific checklist of things to check in the newly written code. This saves time. ChatGPT also goes on to suggest how the code could be improved.

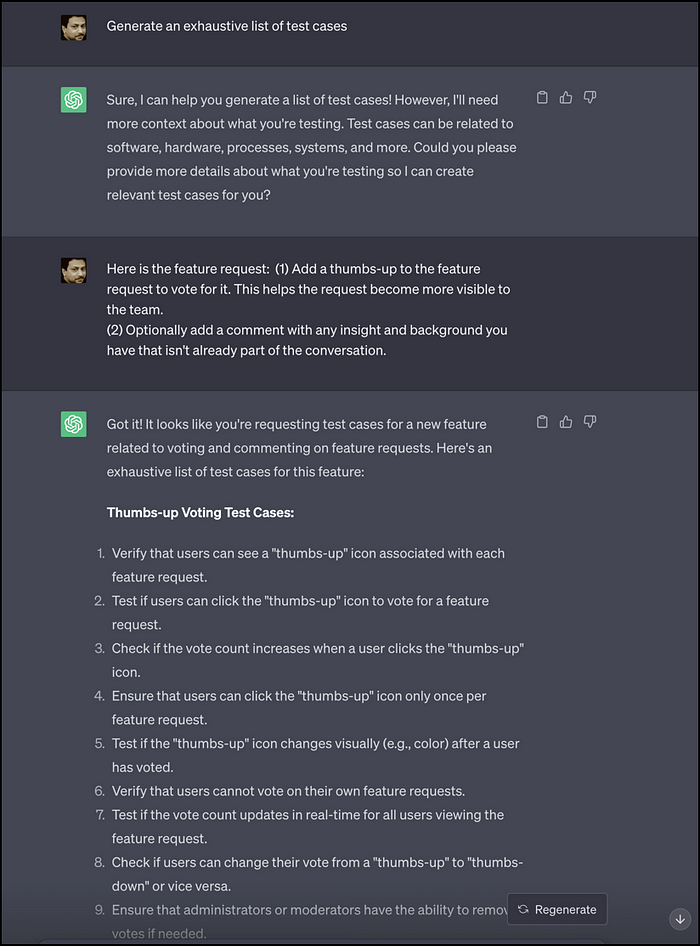

Generate Test Cases

Whether we follow Test Driven Development (TDD) or simply write unit tests, one of the initial tasks is to produce a list of test cases from the feature requirements. The tests can then be written from this list. Here’s an example of generating an initial set of test cases. We begin by simply prompting ChatGPT with the prompt below:

- Generate an exhaustive list of test cases

Here’s the full output from ChatGPT. The list seems exhaustive and even includes test cases for non-functional requirements!

Thumbs-up Voting Test Cases:

- Verify that users can see a “thumbs-up” icon associated with each feature request.

- Test if users can click the “thumbs-up” icon to vote for a feature request.

- Check if the vote count increases when a user clicks the “thumbs-up” icon.

- Ensure that users can click the “thumbs-up” icon only once per feature request.

- Test if the “thumbs-up” icon changes visually (e.g., color) after a user has voted.

- Verify that users cannot vote on their own feature requests.

- Test if the vote count updates in real-time for all users viewing the feature request.

- Check if users can change their vote from a “thumbs-up” to “thumbs-down” or vice versa.

- Ensure that administrators or moderators have the ability to remove votes if needed.

- Test the behavior of the “thumbs-up” feature on various devices and browsers.

Commenting Test Cases:

- Verify that users can see a comment section associated with each feature request.

- Test if users can type and submit a comment on a feature request.

- Check if submitted comments are displayed correctly with the username, timestamp, and content.

- Ensure that users can edit their own comments after submission.

- Test if users can delete their own comments from a feature request.

- Verify that users cannot edit or delete comments from other users.

- Test if the comment section supports formatting (e.g., bold, italics, bullet points).

- Check if URLs posted in comments are automatically hyperlinked.

- Ensure that long comments are displayed properly without layout issues.

- Test the behavior of the commenting feature on various devices and browsers.

Integration Test Cases:

- Verify that the total vote count accurately reflects the sum of all “thumbs-up” votes.

- Test if the vote count and comments update properly when the page is refreshed.

- Check if users can see the highest voted feature requests listed at the top.

- Ensure that comments and votes are properly synchronized between different clients.

- Test the performance of the feature when there are a large number of votes and comments.

Security and Permissions Test Cases:

- Verify that only authenticated users can vote or comment.

- Test if unauthorized users are restricted from voting or commenting.

- Check if the feature request owner has the ability to moderate comments (edit or delete).

- Ensure that only administrators or authorized users can remove comments.

- Test for potential security vulnerabilities, such as injecting scripts into comments.

Usability and User Experience Test Cases:

- Verify that the “thumbs-up” icon and comment section are intuitively located.

- Test if users receive clear feedback when they vote or submit a comment.

- Check if error messages are displayed appropriately for failed actions (e.g., voting without authentication).

- Ensure that the user’s own comments and votes are visually distinct from others’.

- Test the responsiveness and load times of the feature under various network conditions.

ChatGPT adds a disclaimer at the end of the output: Remember that this list is extensive and might need to be tailored to your specific application and requirements.
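To show how entries from such a list might turn into executable checks, here is a minimal sketch covering three of the thumbs-up cases: the vote count increases, a user can vote only once, and users cannot vote on their own feature requests. The `FeatureRequest` class is a hypothetical in-memory model written for this example, and plain Java checks stand in for a test framework such as JUnit.

```java
import java.util.HashSet;
import java.util.Set;

class FeatureRequestVotingSketch {

    // Hypothetical in-memory model of a feature request (not from the ChatGPT output).
    static class FeatureRequest {
        private final String owner;
        private final Set<String> voters = new HashSet<>();

        FeatureRequest(String owner) {
            this.owner = owner;
        }

        // Records a thumbs-up and reports whether the vote was accepted.
        boolean thumbsUp(String user) {
            if (user.equals(owner)) {
                return false; // owners cannot vote on their own request
            }
            return voters.add(user); // Set.add returns false for a repeat vote
        }

        int voteCount() {
            return voters.size();
        }
    }

    // Fails loudly, naming the test case that was violated.
    static void check(boolean condition, String testCase) {
        if (!condition) {
            throw new AssertionError("Failed: " + testCase);
        }
    }

    public static void main(String[] args) {
        FeatureRequest request = new FeatureRequest("alice");

        check(request.thumbsUp("bob"), "a user can vote for a feature request");
        check(request.voteCount() == 1, "the vote count increases after a vote");
        check(!request.thumbsUp("bob"), "a user can vote only once per feature request");
        check(request.voteCount() == 1, "a repeat vote does not change the count");
        check(!request.thumbsUp("alice"), "users cannot vote on their own feature requests");
        check(request.voteCount() == 1, "a rejected vote does not change the count");

        System.out.println("All sketched voting test cases passed");
    }
}
```

Writing even this small subset forces decisions the list leaves open, such as whether a rejected vote should fail silently or raise an error, which is exactly the kind of question to take back to the product team.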

Conclusion

Beyond the IDE, ChatGPT could become a valuable additional tool in the software engineer’s toolkit for more effective and efficient development practices.

As we’ve seen here, ChatGPT can help boost the pace of software development by defining the initial structure of the tests, leaving the software engineer to focus on writing the code for them one by one. The time taken to think of different test cases could be reduced significantly, since the initial set can easily be generated with ChatGPT.

Prompt-assisted code reviews can make the reviewer’s job easier. Developers also don’t need to wait until the PR stage to get their code reviewed: as a personal productivity tool, ChatGPT could run the first pass of a code review even as the code is written incrementally.

Finally, with a ready set of test cases generated by ChatGPT, software engineers could get started writing unit tests and uncover scenarios that could otherwise have led to bugs.

We can expect further improvements in the domain of generative AI and coding. It is 2023, and with ChatGPT the field already looks promising.
